Three Core Considerations for AI-Generated Content in Higher Ed

AI has the potential to completely reshape how we create content. Here are three core considerations higher ed marketers need to be aware of.

I recently read a great blog post by Carrie Phillips, Chief Communications and Marketing Officer at the University of Arkansas at Little Rock (an OHO client!), reflecting on ChatGPT, the latest in a string of AI tools to take social media feeds by storm.

This particular tool is a conversational chatbot developed by OpenAI. It can generate comprehensive and informative copy in response to a wide range of queries in a matter of seconds. Given how time- and resource-intensive copywriting can be, this sounds too good to be true.

In her article, Phillips notes the promise of this technology, as well as the inevitability that it will affect marketing jobs in some fashion whether we want it to or not, and offers three wise best practices:

- AI is a tool, not a solution.

- It doesn’t eliminate the need for strategy to guide the copy.

- It should be used sparingly, so as not to position it as a replacement for talented writers or marketers.

I am grateful to Phillips for starting the conversation on what is sure to be a growing influence on how we do our jobs as communicators and marketers. As I reflected on this topic, I thought of a few additional questions that higher ed marketers should consider when deciding how, or whether, to integrate this tool into their workflows. (Where appropriate, I’ve also asked ChatGPT itself to weigh in.)

Can AI Capture Your Brand Voice?

As Phillips and others have noted, the copy generated by tools like ChatGPT may be technically fine in terms of grammar, syntax, spelling, and so on. (Though as marketing expert C.C. Chapman observed, sometimes it assembles the right pieces of information in the wrong order or context.) But this is the bare minimum of what we should expect from public-facing content. Beyond that, the copy is extremely generic, devoid of any authentic brand voice, and often long-winded and repetitive.

But maybe that’s fine? Could we just have ChatGPT write all the rote content, such as directions to the admissions office for visitors arriving on the highway from the north, or an explanation of what the discipline of anthropology entails? That would surely be a relief to overworked marketing offices.

The answer is… maybe, maybe not. Directions, sure. (Though why not just link to Google Maps?) And while ChatGPT could easily spit out a definition of anthropology, economics, or any other discipline (the kind that often leads off academic program pages), doing so would only perpetuate, more efficiently, the chronic misstep of publishing generic content that fails to distinguish the institution or address actual user needs. That’s not to say relevant use cases don’t exist, but even those must be chosen with care.

Having clear directions is important, but the content that really moves the needle for us is the most brand-aligned and audience-centric: content that communicates our truths in a way that honors the truths of our audience. Margot Bloomstein, a brand and content strategist and author of the 2021 book “Trustworthy: How the Smartest Brands Beat Cynicism and Bridge the Trust Gap,” wisely observes:

If we use AI to create the routine and mundane content, good communicators need to be even better to create the nuanced, brand-appropriate, interesting content. Delight defies algorithms — but that's the challenge and opportunity I see more and more in #aicontent.

Delight — when executed with restraint and precision — can transform a perfunctory user experience into a memorable one. This is where the true value of experienced marketers and copywriters shines brightest, and where AI’s limitations are most apparent.

When asked if it can write to a brand voice or style, ChatGPT says that it can write copy consistent with a specific voice or style given the appropriate input. However, it adds:

I do not have access to any proprietary brand information or individual data, and I generate responses based on the input provided to me and the knowledge I have been trained on. It is up to the user to ensure that the text I generate aligns with their desired brand voice or style.

Perhaps a tool like Yext will address these issues. Yext effectively creates a mini-AI fueled by your own content that can be used for customer support, optimized search, and other purposes, forming a walled garden in which a more brand-aligned AI can flourish. Time will tell.

Can AI Account for Your Diversity, Equity, and Inclusion Priorities?

The problem of algorithmic bias has been extensively documented. In short, any system can only work with the input it receives. And in many cases, when a system is designed by people with a relatively homogeneous set of backgrounds, values, and experiences, its output will reflect the biases of its designers. This has led to many instances of sexism, racism, and other cultural blind spots and missteps surfacing in AI products.

Enter higher ed, with its renewed commitment to diversity, equity, and inclusion. It has been heartening to see this topic come to the fore at so many colleges and universities, which is why it is particularly concerning to consider how relying on AI-generated content could undermine these efforts.

After all, how could a tool like ChatGPT know if I am writing for an audience that is neurodiverse, or for whom English is a second language? How can I be certain that the copy generated by ChatGPT does not reflect any biases that signal to a prospective student that they would not be welcome on campus?

All content, whether it is created by a robot, an intern, or an accomplished marketing leader, should be carefully crafted and reviewed to ensure it authentically reflects your institution’s diversity and adheres to best practices for inclusive content. Because those best practices can shift over time, that is all the more reason to keep content in the hands of skilled writers and editors. These responsibilities are too important to delegate.

AI ethics advisor Elizabeth M. Adams has shared several leadership guides based on her research. She emphasizes engaging diverse stakeholders both in discussions about AI and in developing recommendations for its responsible use, echoing the adage “nothing about us without us.”

As higher ed reckons with AI, the reading list linked above should be a trusty companion on the journey.

Can AI Consider Audience Context?

I gave my friend ChatGPT a test to gauge how well it could, off the cuff, tailor its response to a given audience context.

Write about upcoming financial aid deadlines to somebody who is very nervous about getting a scholarship

If you're someone who is very nervous about getting a scholarship, you're not alone. Many students feel the same way, and it's important to remember that there are many financial aid options available to help you pay for college. One important thing to keep in mind is that there are deadlines for applying for financial aid, so it's important to make sure you're aware of them. [...]

Write about upcoming financial aid deadlines to somebody who has repeatedly missed and forgotten about deadlines

If you're someone who has repeatedly missed and forgotten about financial aid deadlines, it's important to take steps to improve your situation. Missing deadlines can have serious consequences, as it can prevent you from getting the financial aid you need to pay for college. [...]

Write about upcoming financial aid deadlines to somebody who loves chocolate ice cream

If you're someone who loves chocolate ice cream, you're in good company. Many people enjoy the rich, creamy flavor of chocolate ice cream, and it's a delicious treat that can be enjoyed in many different ways. But while you're enjoying your favorite flavor of ice cream, it's important to remember that there are also financial aid deadlines coming up that you need to be aware of. [...]

With the first two queries, I was trying to coax ChatGPT into adjusting its tone for a defined audience context; with the third, I just wanted to see how it would respond to the absurd. But all three responses sound remarkably similar and templated: vaguely supportive, not particularly human, yet keenly aware of the distracting qualities of chocolate ice cream.

Considering the context in which your audience approaches your information (what they need, what they’re feeling, what their next step should be) is critical to communicating effectively. As Phillips indicated, this is where strategy becomes essential, and a user-centric strategy should always anchor your communications efforts.

Colleges and universities devote significant resources to market research, user testing, and other ways of better understanding their diverse audiences. The value of those insights is irreplaceable, and it must be retained.

Artificial Intelligence Is Here to Stay: Now What?

There is no closing Pandora’s box: AI is here for good. Chatbots, voice search, and other services have already put its capabilities to successful use. Tools like ChatGPT may even one day upend the traditional search engine model.

So how do we continue to use this technology responsibly as it becomes increasingly able not just to surface existing information but to generate entirely new content?

Perhaps AI’s role will be to provide a solid (if generic) foundation upon which to layer our brand narrative and user context. In an online content strategy forum, I noticed some people describing ChatGPT almost as a writing partner, providing a starting point from which to refine and optimize copy. A design approach or logo idea generated by Midjourney or OpenAI’s DALL-E could serve a similar role.

In a LinkedIn post written with the help of ChatGPT, University of Florida’s Todd Sanders champions the potential of tools like ChatGPT to aid in idea generation and refinement. (He also set a 2023 professional goal to “Include #AI (text/image generation) as an active participant in every brainstorming session.”)

Using AI as a starting point rather than a finished product may really be the only option: Google has already stated that it can detect AI-generated copy and flags it as spam.

In her newsletter, content expert and author Ann Handley wrote:

You can't put AI in the hands of someone who can't write and expect anything other than mediocre. And you can't expect AI to ever disrupt your own writing —to call you to a higher place creatively — without a little gung-ho and gusto of your own.

Bloomstein argues that we need to raise our bar for what counts as “quality” content. This makes sense, because content is more than just information. Many layers factor into creating quality content: brand, audience, goals, taxonomy, SEO, readability, and inclusion, to name a few. (Concerned about unscrupulous freelancers passing off AI-generated content as their own? You’re in luck: checker tools already exist.)

Can it motivate? Enlighten? Sell? Convert? Entertain? Change hearts and minds? Quality content can — and must — do these things.

AI can be a tool in the pursuit of this quality, but it must be wielded with caution and restraint. Ultimately, it will be you, the marketer, who determines whether the content is getting the job done.

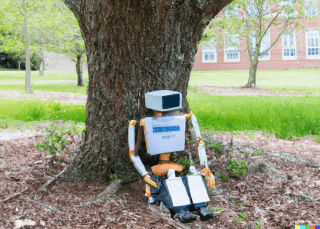

Main image generated by DALL-E with the prompt "artificial intelligence sitting under a tree on a college campus"